The complete CI/CD Setup (Part 3 - The first Continuous Integration and Deployment pipeline)

In this series of blog posts I’ll describe how to set up a complete Continuous Integration and Continuous Deployment pipeline in a small environment: which components are required, how to deploy them, and how to generalize the setup for additional applications.

- In the first part I gave an overview and described the components that I’ll deploy.

- In the second part we deployed the components that are required for the pipeline.

- In this part we will now configure Jenkins and Gitea for a CI/CD workflow and start developing the pipeline.

Configure Gitea

The configuration of Gitea is very easy and initially requires only one step. Open Gitea in the browser and click Register on the right side. The first user registered in Gitea automatically becomes the administrator. After registering, you are logged in automatically.

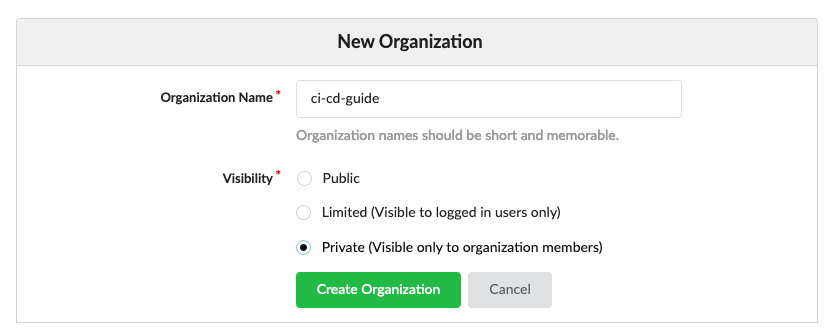

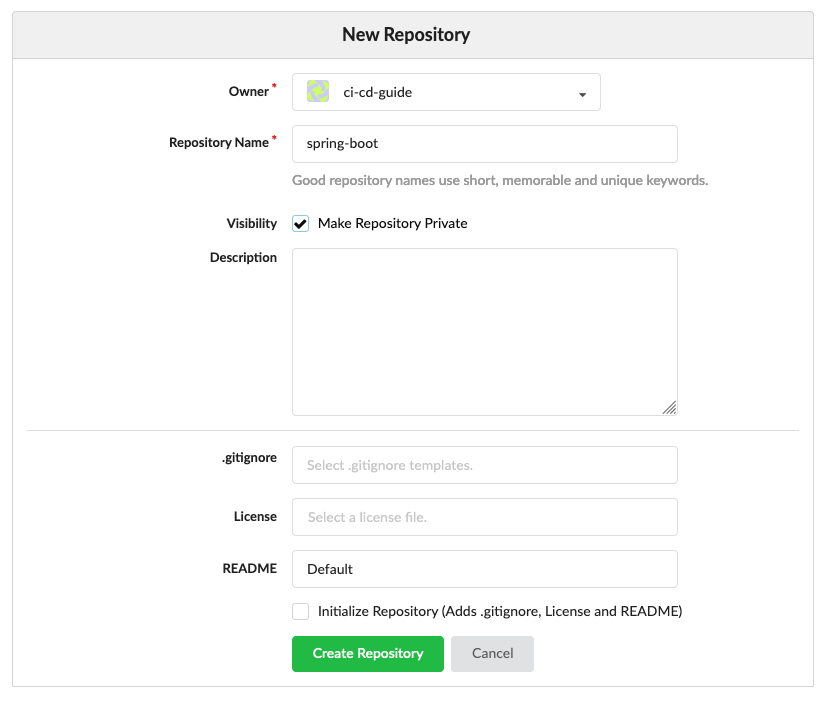

Now we can create our first Gitea organization: click the plus icon on the right side of the top navigation and then “New Organization”.

Choose the organization name and its visibility, then create it. Inside the organization we can now create our first repository: click the plus icon in the top navigation again, and this time choose “New Repository”. Select the organization that should own the repository and enter the repository name. You can again configure the visibility and some additional parameters. When you are done, click Create Repository. Gitea creates the repository and shows its page together with the instructions for cloning it.

Configure Jenkins

Now let’s configure Jenkins. First we log in with the username “admin” and the password stored in the Kubernetes secret:

    kubectl get secret --namespace jenkins jenkins -o jsonpath="{.data.jenkins-admin-password}" | base64 --decode; echo
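Kubernetes stores secret values base64-encoded, which is why the command pipes the value through base64 --decode. Here is a minimal sketch of that step in isolation. The value `s3cr3t` is a made-up placeholder, not a real Jenkins password:

```shell
#!/bin/sh
# Made-up placeholder password, encoded the way a value appears under .data in a secret.
encoded=$(printf '%s' 's3cr3t' | base64)

# Decode it again, as the kubectl pipeline does with the real value.
decoded=$(printf '%s' "$encoded" | base64 --decode)

echo "encoded: $encoded"
echo "decoded: $decoded"
```

The trailing echo in the kubectl command only adds a newline after the decoded password, so the shell prompt does not end up glued to it.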

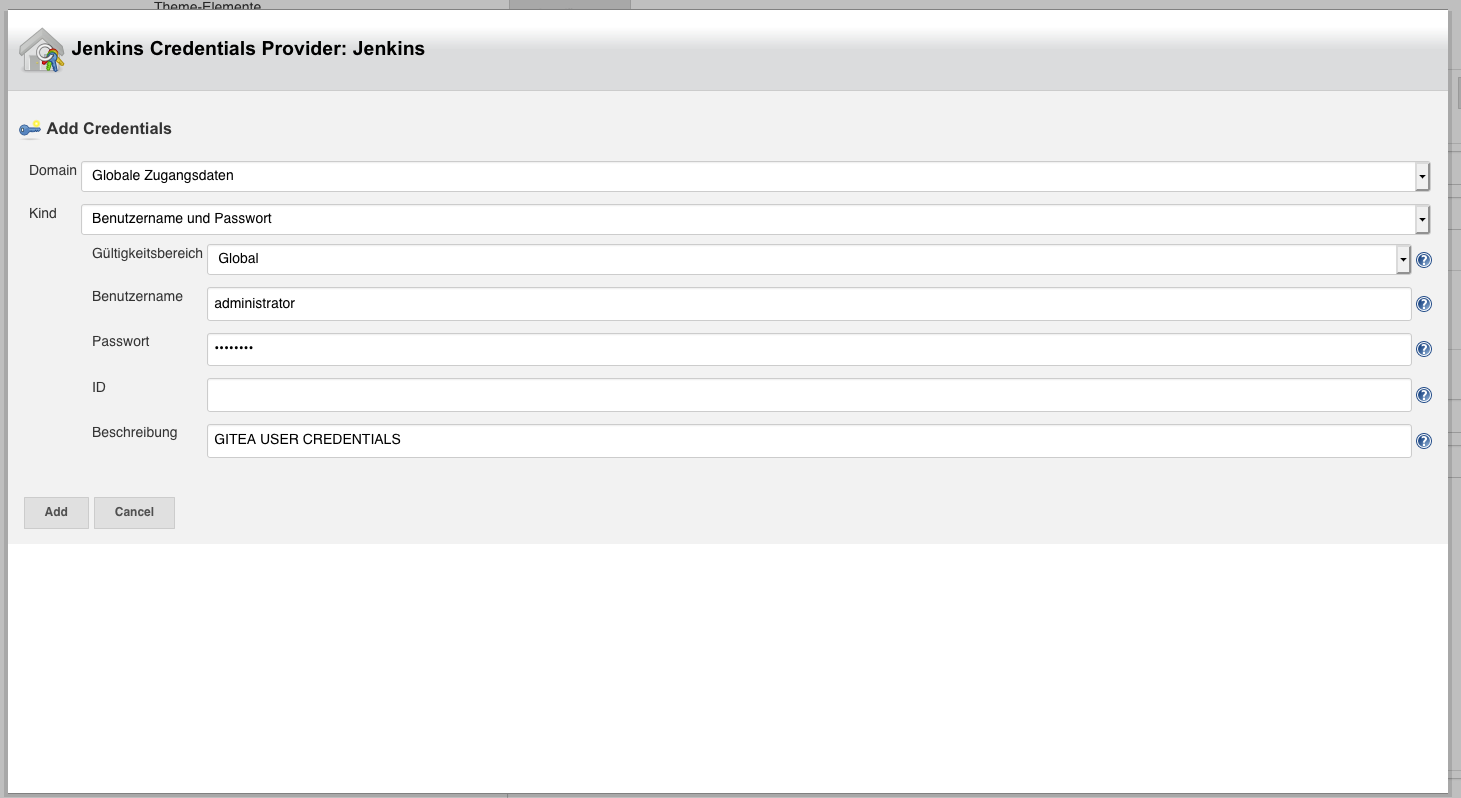

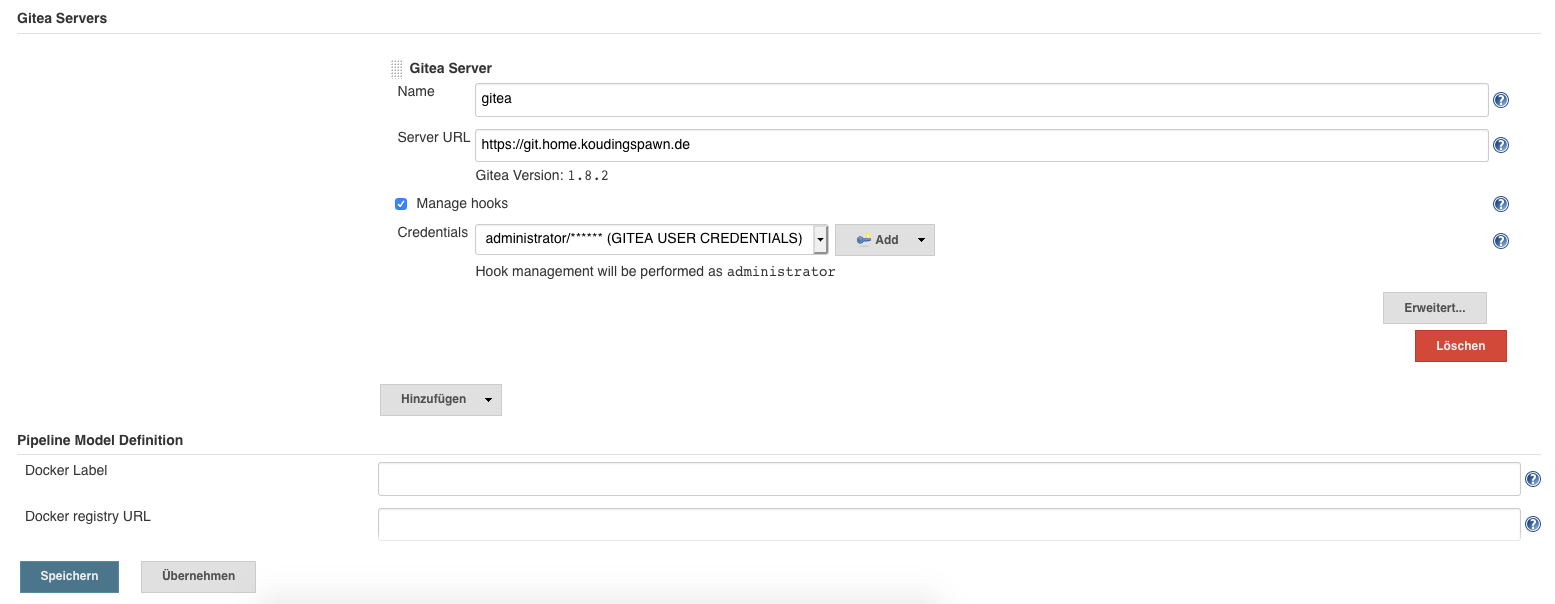

After this we click Manage Jenkins and then System Configuration. There we find an area called “Gitea Servers”, where we click Add to add a new connection to a Gitea server. Next we configure the name of the server and the server URL, and select the “Manage hooks” check box. Then we add new credentials. You can create a separate user for the Jenkins-to-Gitea connection or simply use the administrator account (which is not very secure).

Finally we select these credentials in the System Configuration menu and click Save to finish the Jenkins-to-Gitea server configuration.

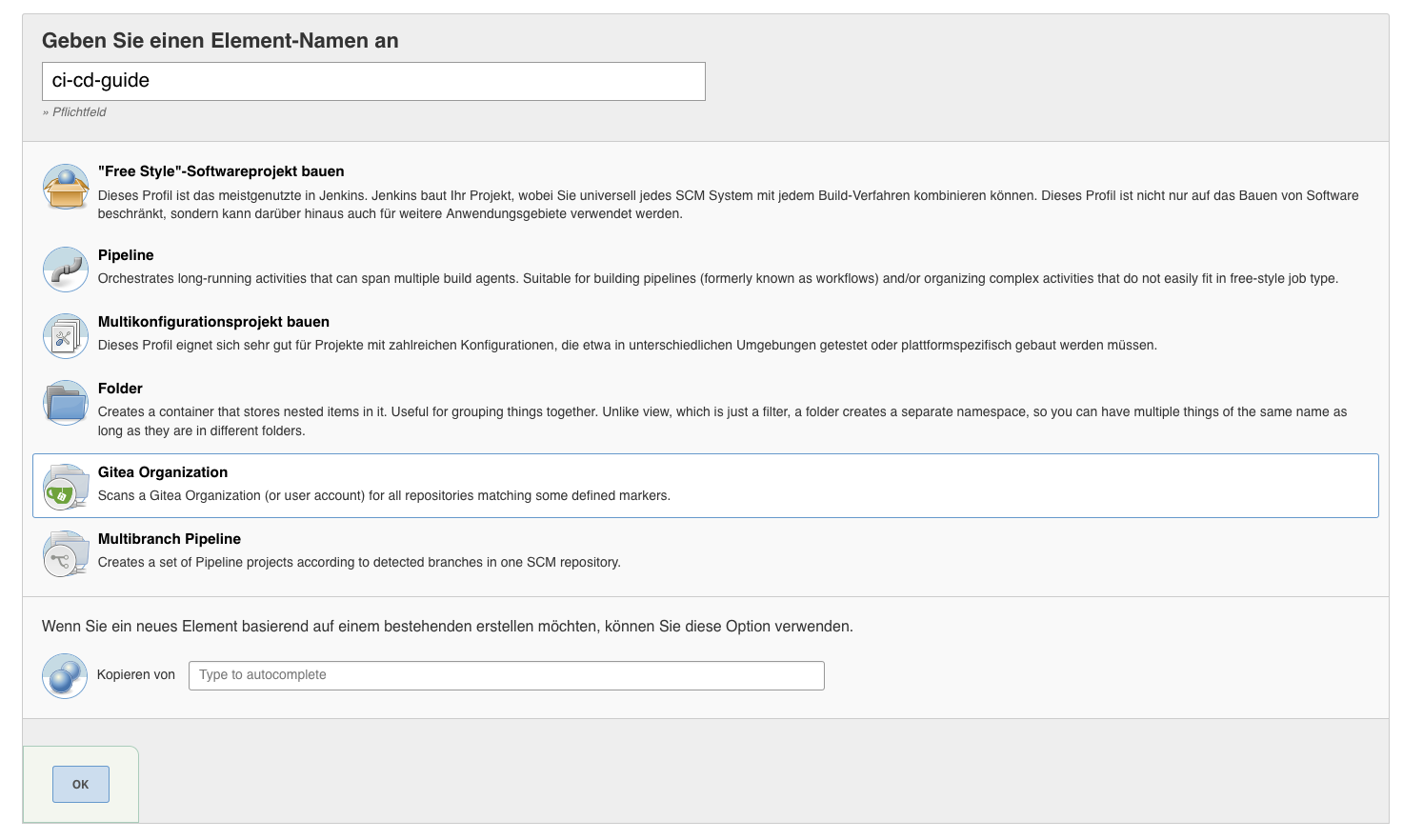

Next we can create our first CI/CD pipeline by clicking “New Item” in the Jenkins main menu. This opens a dialog where we enter a name for the item and select “Gitea Organization” as the project type.

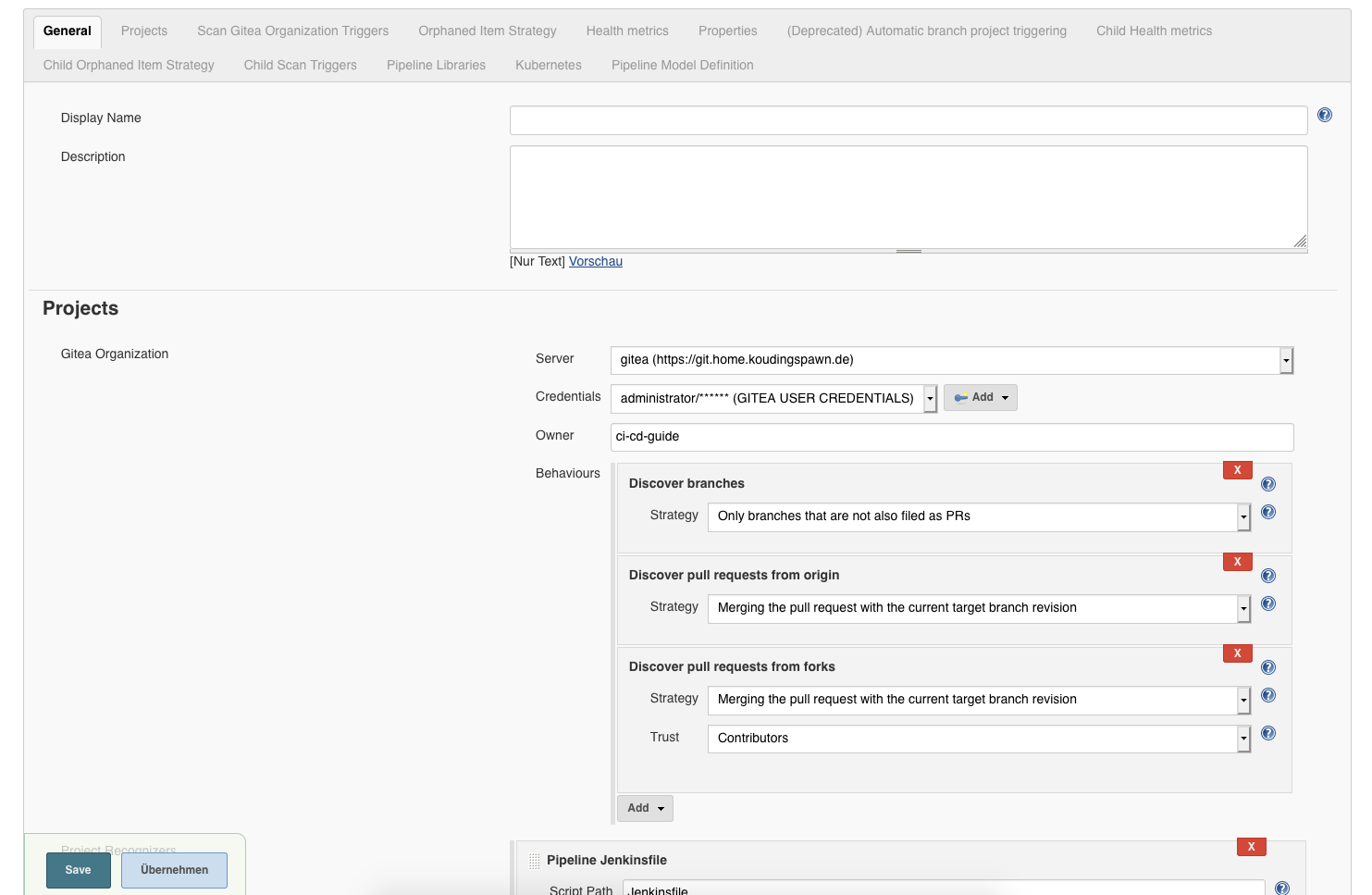

After we click “OK”, a new dialog inside the project opens where we configure the Gitea server connection and the credentials. The last required step is to enter the name of the organization we created in Gitea earlier into the Owner field:

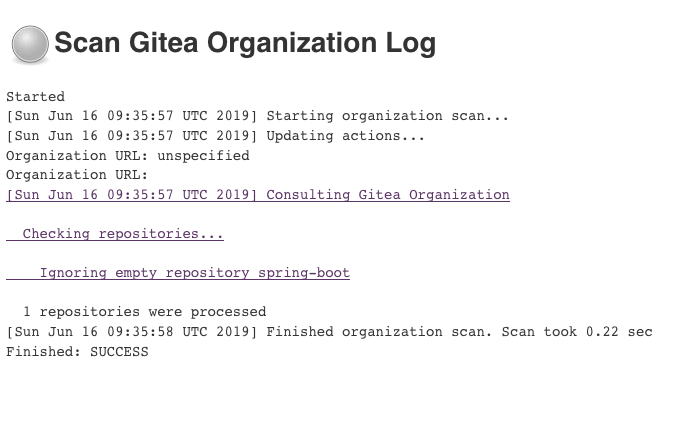

Now Jenkins will scan the repositories in this organization for projects that contain a Jenkinsfile.
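As a rough local analogue of that scan, the following sketch lists which checked-out repositories would qualify. It assumes all checkouts live directly below one directory and that the Jenkinsfile sits in the repository root; `list_pipeline_repos` is a hypothetical helper, not something Jenkins or Gitea provides:

```shell
#!/bin/sh
# Hypothetical helper: print the name of every directory directly below $1
# that contains a file called Jenkinsfile in its root.
list_pipeline_repos() {
  for dir in "$1"/*/; do
    if [ -f "${dir}Jenkinsfile" ]; then
      basename "$dir"
    fi
  done
}

# Demo with throwaway directories standing in for repositories.
root=$(mktemp -d)
mkdir -p "$root/spring-boot" "$root/docs"
touch "$root/spring-boot/Jenkinsfile"

list_pipeline_repos "$root"
```

Only `spring-boot` is listed here, because the `docs` directory has no Jenkinsfile.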

Develop our first pipeline for CI / CD

After all this configuration we are ready to develop our first Continuous Integration and Continuous Deployment pipeline in Jenkins. To do so, we push a small application into the Gitea repository we created earlier. Based on a source address and a destination address, this application calculates a fictional price for transport between the two locations. To run correctly, the application needs an API key, because the coordinates of the locations and the distances are calculated via https://openrouteservice.org.

After registering there you receive an API key, which you can store in Vault. During deployment, Vault-CRD synchronizes it into a Kubernetes secret, and the pod can load it as an environment variable. This allows Spring Boot to read the API key without it being stored in a Git repository:

    vault write kubernetes-secrets/demo-app apikey=<apikey>

The last step is to clone the project and push it to Gitea:

    git clone https://github.com/DaspawnW/complete-ci-cd-spring-boot.git
    cd complete-ci-cd-spring-boot
    git checkout -t origin/clean-project
    git remote add gitea https://git.home.koudingspawn.de/ci-cd-guide/spring-boot.git
    git push gitea clean-project:master
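The last command uses a refspec of the form local:remote, so the local branch clean-project ends up as master on the Gitea side. The following sketch shows this with throwaway repositories. The bare repository is only a stand-in for the Gitea remote, and the commit content is made up:

```shell
#!/bin/sh
# Throwaway directories; the bare repository stands in for the Gitea remote (assumption).
workdir=$(mktemp -d)
git init --quiet --bare "$workdir/gitea.git"
git init --quiet "$workdir/app"
cd "$workdir/app"

# Create one commit on a local branch named clean-project.
git checkout --quiet -b clean-project
echo "demo" > README.md
git add README.md
git -c user.name="Demo" -c user.email="demo@example.invalid" commit --quiet -m "Initial commit"

# Same shape as above: add the remote, then push <local branch>:<remote branch>.
git remote add gitea "$workdir/gitea.git"
git push --quiet gitea clean-project:master

# List the branches that now exist on the remote side.
remote_branches=$(git ls-remote --heads gitea | awk '{print $2}')
echo "$remote_branches"
```

The remote ends up with a single branch, refs/heads/master, and no clean-project branch.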

Build the project

Now we can start writing our Jenkinsfile, which tells Jenkins what to do in this project. Jenkins, as deployed by the Helm chart in the previous part, is configured to run the steps in Kubernetes pods. First we specify the required containers, in this case Maven 3.6 with JDK 11. Then we use node(label) to run the job inside this pod and check out the project. After this we simply run mvn -B clean install inside the maven container to build the project.

    def label = "ci-cd-${UUID.randomUUID().toString()}"

    podTemplate(label: label,
      containers: [
        containerTemplate(name: 'maven', image: 'maven:3.6-jdk-11-slim', ttyEnabled: true, command: 'cat')
      ]) {
      node(label) {
        stage('Checkout project') {
          def repo = checkout scm

          container('maven') {
            stage('Build project') {
              sh 'mvn -B clean install'
            }
          }
        }
      }
    }

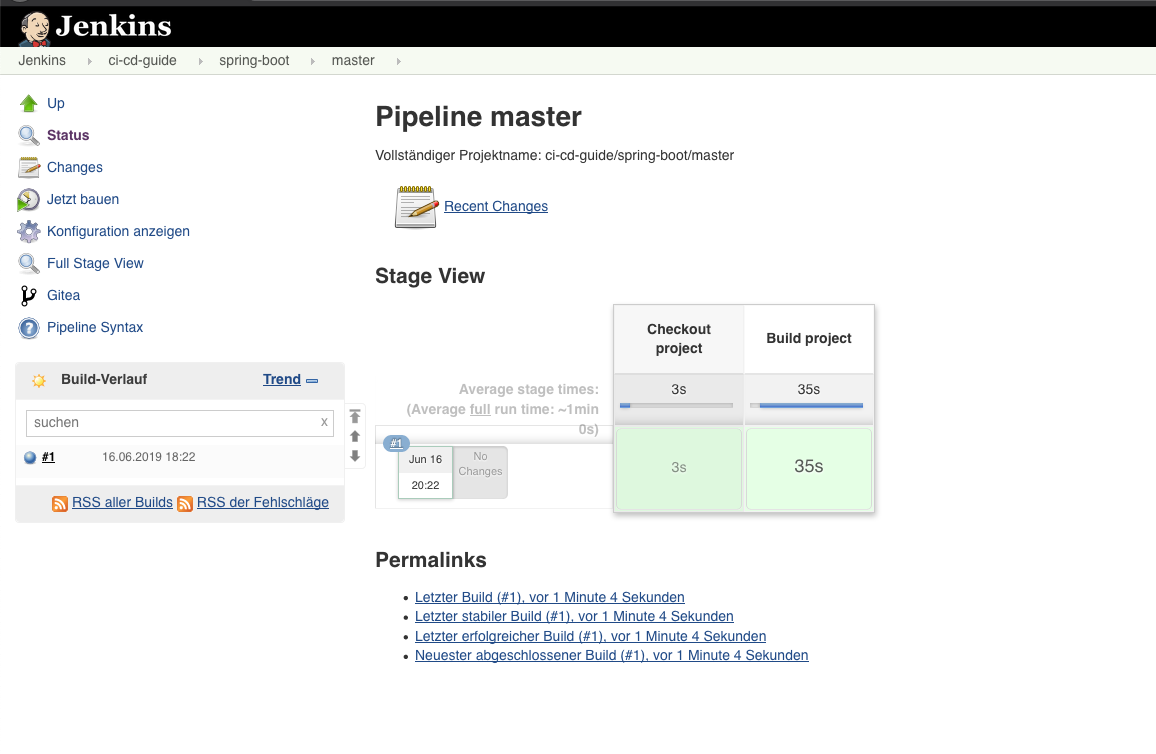

When you push this changes you should see, that Jenkins Builds the project in the Organization based Project:

Build and Push the Docker image

The next step is to build the project as a Docker image and push it to the registry deployed in Part 2 of the series. To do so, we add a new container to the pod that contains Docker and mounts docker.sock from the host system:

    def label = "ci-cd-${UUID.randomUUID().toString()}"

    podTemplate(label: label,
      containers: [
        containerTemplate(name: 'maven', image: 'maven:3.6-jdk-11-slim', ttyEnabled: true, command: 'cat'),
        containerTemplate(
          name: 'docker',
          image: 'docker',
          command: 'cat',
          ttyEnabled: true,
          envVars: [
            secretEnvVar(key: 'REGISTRY_USERNAME', secretName: 'registry-credentials', secretKey: 'username'),
            secretEnvVar(key: 'REGISTRY_PASSWORD', secretName: 'registry-credentials', secretKey: 'password')
          ]
        )
      ],
      volumes: [
        hostPathVolume(hostPath: '/var/run/docker.sock', mountPath: '/var/run/docker.sock')
      ]) {
      node(label) {
        stage('Checkout project') {
          def repo = checkout scm

          def registryUrl = "registry.home.koudingspawn.de"
          def dockerImage = "ci-cd-guide/spring-boot"
          def dockerTag = "${repo.GIT_BRANCH}-${repo.GIT_COMMIT}"

          container('maven') {
            stage('Build project') {
              sh 'mvn -B clean install'
            }
          }

          container('docker') {
            stage('Build docker') {
              sh "docker build -t ${registryUrl}/${dockerImage}:${dockerTag} ."
              sh "docker login ${registryUrl} --username \$REGISTRY_USERNAME --password \$REGISTRY_PASSWORD"
              sh "docker push ${registryUrl}/${dockerImage}:${dockerTag}"
              sh "docker rmi ${registryUrl}/${dockerImage}:${dockerTag}"
            }
          }
        }
      }
    }

In Part 2 of the series we synchronized our registry-credentials with the jenkins namespace in Kubernetes via Vault-CRD. Now we can create our second container, the docker container, and mount these registry credentials as environment variables. That is why the sh lines look a bit confusing: the escaped dollar signs (\$) stop Jenkins from interpolating these variables itself, so the shell can read them from the container's environment. After committing this you should see that Jenkins has built our project successfully again, and we are now able to deploy our first version after a successful build.
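The effect of escaping the dollar sign can be reproduced with two nested shells. This is only an analogy: here the outer shell plays the role that Groovy string interpolation plays in the Jenkinsfile, and the values `outer-value` and `inner-value` are made up for the demonstration:

```shell
#!/bin/sh
# Value known to the outer shell (stands in for the Groovy side of the pipeline).
REGISTRY_USERNAME="outer-value"

# Unescaped: the outer shell substitutes the variable before the inner shell starts,
# so the inner shell never looks at its own environment.
early=$(REGISTRY_USERNAME="inner-value" sh -c "echo $REGISTRY_USERNAME")

# Escaped: the dollar sign is passed through literally, so the inner shell
# resolves the variable from its own environment at run time.
late=$(REGISTRY_USERNAME="inner-value" sh -c "echo \$REGISTRY_USERNAME")

echo "unescaped: $early"
echo "escaped:   $late"
```

The unescaped form prints outer-value and the escaped form prints inner-value. In the Jenkinsfile the escaped form is the one we want, because the credentials exist only in the docker container's environment and not as Groovy variables.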

Start deploying the application

To let our Jenkins pipeline do Continuous Deployment and deploy the newly built Docker image after each push, we first have to write an RBAC configuration. It allows our build pod to reach Tiller, so that helm can deploy the new version:

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: helm
      namespace: jenkins
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      name: tiller-user
      namespace: kube-system
    rules:
    - apiGroups:
      - ""
      resources:
      - pods/portforward
      verbs:
      - create
    - apiGroups:
      - ""
      resources:
      - pods
      verbs:
      - list
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: tiller-user-binding
      namespace: kube-system
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: tiller-user
    subjects:
    - kind: ServiceAccount
      name: helm
      namespace: jenkins
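To check that the binding grants what the build pod needs, the service account can be impersonated with kubectl. The sketch below only builds the user name Kubernetes assigns to the service account, so it runs without a cluster. The two kubectl checks are left as comments and assume you have cluster access and permission to impersonate; `service_account_user` is a hypothetical helper:

```shell
#!/bin/sh
# Hypothetical helper: build the user name Kubernetes assigns to a service account.
# $1 = namespace, $2 = service account name
service_account_user() {
  printf 'system:serviceaccount:%s:%s' "$1" "$2"
}

sa_user=$(service_account_user jenkins helm)
echo "$sa_user"

# With cluster access, both checks should answer "yes" once the manifests are applied
# (commented out so the sketch runs anywhere):
# kubectl auth can-i list pods --namespace kube-system --as "$sa_user"
# kubectl auth can-i create pods --subresource=portforward --namespace kube-system --as "$sa_user"
```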

As you may have seen in the code, a Helm chart is already part of the project. So now we can simply run helm inside the Jenkinsfile to deploy the new version of the application:

    def label = "ci-cd-${UUID.randomUUID().toString()}"

    podTemplate(label: label,
      serviceAccount: 'helm',
      containers: [
        containerTemplate(name: 'maven', image: 'maven:3.6-jdk-11-slim', ttyEnabled: true, command: 'cat'),
        containerTemplate(
          name: 'docker',
          image: 'docker',
          command: 'cat',
          ttyEnabled: true,
          envVars: [
            secretEnvVar(key: 'REGISTRY_USERNAME', secretName: 'registry-credentials', secretKey: 'username'),
            secretEnvVar(key: 'REGISTRY_PASSWORD', secretName: 'registry-credentials', secretKey: 'password')
          ]
        ),
        containerTemplate(name: 'helm', image: 'alpine/helm:2.11.0', command: 'cat', ttyEnabled: true)
      ],
      volumes: [
        hostPathVolume(hostPath: '/var/run/docker.sock', mountPath: '/var/run/docker.sock')
      ]) {
      node(label) {
        stage('Checkout project') {
          def repo = checkout scm

          def dockerImage = "ci-cd-guide/spring-boot"
          def dockerTag = "${repo.GIT_BRANCH}-${repo.GIT_COMMIT}"
          def registryUrl = "registry.home.koudingspawn.de"
          def helmName = "spring-boot-${repo.GIT_BRANCH}"
          def url = "${helmName}.home.koudingspawn.de"
          def certVaultPath = "certificates/*.home.koudingspawn.de"

          container('maven') {
            stage('Build project') {
              sh 'mvn -B clean install'
            }
          }

          container('docker') {
            stage('Build docker') {
              sh "docker build -t ${registryUrl}/${dockerImage}:${dockerTag} ."
              sh "docker login ${registryUrl} --username \$REGISTRY_USERNAME --password \$REGISTRY_PASSWORD"
              sh "docker push ${registryUrl}/${dockerImage}:${dockerTag}"
              sh "docker rmi ${registryUrl}/${dockerImage}:${dockerTag}"
            }
          }

          container('helm') {
            stage("Deploy") {
              sh("""helm upgrade --install ${helmName} --namespace='${helmName}' \
                --set ingress.enabled=true \
                --set ingress.hosts[0]='${url}' \
                --set ingress.annotations.\"kubernetes\\.io/ingress\\.class\"=nginx \
                --set ingress.tls[0].hosts[0]='${url}' \
                --set ingress.tls[0].secretName='tls-${url}' \
                --set ingress.tls[0].vaultPath='${certVaultPath}' \
                --set ingress.tls[0].vaultType='CERT' \
                --set image.tag='${dockerTag}' \
                --set image.repository='${registryUrl}/${dockerImage}' \
                --wait \
                ./helm""")
            }
          }
        }
      }
    }

With serviceAccount in the second line of the podTemplate definition we attach the ServiceAccount “helm” to the pod, which allows our helm container to access Tiller. The most important part happens in the “Deploy” stage. There we tell helm how to render our Helm chart and how to deploy it. With --wait we tell helm to wait until the deployment has finished.

After you commit this change you should see that the helm deployment happens after Maven has built the project and the new Docker image has been pushed to the registry.
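The release name, the namespace and the hostname used in the Deploy stage are all derived from the branch name. The following shell sketch reproduces that derivation outside Jenkins. The `sanitize_branch` helper is not part of the pipeline above; it is an assumption added here because branch names such as feature/Login contain characters that are not valid in hostnames or Docker tags:

```shell
#!/bin/sh
# Hypothetical helper (not in the Jenkinsfile): lowercase the branch name and
# replace every character outside [a-z0-9-] with a hyphen.
sanitize_branch() {
  printf '%s' "$1" | tr '[:upper:]' '[:lower:]' | sed 's/[^a-z0-9-]/-/g'
}

# Mirrors helmName from the Jenkinsfile, with the sanitized branch name.
helm_name() {
  printf 'spring-boot-%s' "$(sanitize_branch "$1")"
}

# Mirrors url from the Jenkinsfile.
deploy_url() {
  printf '%s.home.koudingspawn.de' "$(helm_name "$1")"
}

helm_name "master"; echo
deploy_url "feature/Login"; echo
```

For master the release name stays spring-boot-master, exactly as in the pipeline. For feature/Login the sketch yields spring-boot-feature-login.home.koudingspawn.de, whereas the unsanitized branch name would put a slash into the hostname.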

So the first part of Continuous Integration and Continuous Deployment is done. There is only one small annoyance we should fix for now: when we push to a branch other than master, the application is deployed as well, which may produce unnecessary deployments. To prevent this, we can add an if statement around the Deploy stage:

    def label = "ci-cd-${UUID.randomUUID().toString()}"

    podTemplate(label: label,
      serviceAccount: 'helm',
      containers: [
        containerTemplate(name: 'maven', image: 'maven:3.6-jdk-11-slim', ttyEnabled: true, command: 'cat'),
        containerTemplate(
          name: 'docker',
          image: 'docker',
          command: 'cat',
          ttyEnabled: true,
          envVars: [
            secretEnvVar(key: 'REGISTRY_USERNAME', secretName: 'registry-credentials', secretKey: 'username'),
            secretEnvVar(key: 'REGISTRY_PASSWORD', secretName: 'registry-credentials', secretKey: 'password')
          ]
        ),
        containerTemplate(name: 'helm', image: 'alpine/helm:2.11.0', command: 'cat', ttyEnabled: true)
      ],
      volumes: [
        hostPathVolume(hostPath: '/var/run/docker.sock', mountPath: '/var/run/docker.sock')
      ]) {
      node(label) {
        stage('Checkout project') {
          def repo = checkout scm

          def dockerImage = "ci-cd-guide/spring-boot"
          def dockerTag = "${repo.GIT_BRANCH}-${repo.GIT_COMMIT}"
          def registryUrl = "registry.home.koudingspawn.de"
          def helmName = "spring-boot-${repo.GIT_BRANCH}"
          def url = "${helmName}.home.koudingspawn.de"
          def certVaultPath = "certificates/*.home.koudingspawn.de"

          container('maven') {
            stage('Build project') {
              sh 'mvn -B clean install'
            }
          }

          container('docker') {
            stage('Build docker') {
              sh "docker build -t ${registryUrl}/${dockerImage}:${dockerTag} ."
              sh "docker login ${registryUrl} --username \$REGISTRY_USERNAME --password \$REGISTRY_PASSWORD"
              sh "docker push ${registryUrl}/${dockerImage}:${dockerTag}"
              sh "docker rmi ${registryUrl}/${dockerImage}:${dockerTag}"
            }
          }

          if ("${repo.GIT_BRANCH}" == "master") {
            container('helm') {
              stage("Deploy") {
                sh("""helm upgrade --install ${helmName} --namespace='${helmName}' \
                  --set ingress.enabled=true \
                  --set ingress.hosts[0]='${url}' \
                  --set ingress.annotations.\"kubernetes\\.io/ingress\\.class\"=nginx \
                  --set ingress.tls[0].hosts[0]='${url}' \
                  --set ingress.tls[0].secretName='tls-${url}' \
                  --set ingress.tls[0].vaultPath='${certVaultPath}' \
                  --set ingress.tls[0].vaultType='CERT' \
                  --set image.tag='${dockerTag}' \
                  --set image.repository='${registryUrl}/${dockerImage}' \
                  --wait \
                  ./helm""")
              }
            }
          }
        }
      }
    }

Now that the application is deployed, we may want to test whether everything still works as expected. In Part 4 of the series we therefore write Postman tests that are executed automatically after each deployment.