How Vault-CRD can help you

Secret management in Kubernetes is an interesting topic. There are two main ways for applications that require secrets to get access to them:

- The application communicates with a central service and receives the secrets at runtime

- The secrets are stored in Kubernetes and the application expects them to be available as environment variables or as files

But with option 2, how do you store the secrets in Kubernetes, and how do you avoid exposing them, for example to your developers, as you would by storing them unencrypted in your Git repository? GitOps in particular, a best practice for managing applications on Kubernetes, requires a way to sync secrets with Kubernetes without storing them unencrypted in Git.

There are already some solutions that tackle this problem differently from the way I solved it for my work. One stores the secrets encrypted in Git and decrypts them during deployment. Another uses a mutating webhook that replaces environment variables with secrets during deployment. Kubernetes 1.16 adds a new approach based on ephemeral volumes, but it is still under development.

Secret management gets especially tricky when you have several different use cases. That is why I’ve developed Vault-CRD, a small operator that runs inside the Kubernetes cluster and synchronizes secrets stored in HashiCorp Vault with Kubernetes Secrets. It authenticates itself to HashiCorp Vault. For every custom resource (based on its Custom Resource Definition) that describes a secret to synchronize, it then generates a corresponding Secret in Kubernetes.
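To make the last step of that flow concrete, here is a minimal Python sketch that turns key/value data read from Vault into a Kubernetes Secret manifest. It is not Vault-CRD's code. The function name `to_secret_manifest` is hypothetical, and authentication as well as the real Vault and Kubernetes API calls are left out. The one detail it relies on is that values in a Secret's `data` field must be base64-encoded.

```python
import base64
from typing import Dict


def to_secret_manifest(name: str, namespace: str, vault_data: Dict[str, str]) -> dict:
    """Build a Kubernetes Secret manifest from key/value pairs read from Vault.

    Hypothetical helper for illustration; Vault-CRD's implementation differs.
    """
    # Secret.data values must be base64-encoded strings.
    encoded = {
        key: base64.b64encode(value.encode("utf-8")).decode("ascii")
        for key, value in vault_data.items()
    }
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "type": "Opaque",
        "metadata": {"name": name, "namespace": namespace},
        "data": encoded,
    }


if __name__ == "__main__":
    manifest = to_secret_manifest(
        "usercredentials", "default", {"username": "testuser", "password": "verysecure"}
    )
    print(manifest["data"]["username"])  # dGVzdHVzZXI=
```

Kubernetes also accepts unencoded values under `stringData`, which the API server merges into `data` on write. Encoding explicitly keeps the manifest identical to what is stored.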

All of the following examples require Vault-CRD to be installed in your Kubernetes cluster. For details, see the installation instructions: Install Vault-CRD

Synchronizing secrets from HashiCorp Vault to Kubernetes

The simplest use case is synchronizing secrets stored in HashiCorp Vault with Kubernetes. First, we store the secrets in HashiCorp Vault as KV-1 or KV-2 secrets:

```shell
# store a secret in the KV-1 mount "secret"
$ vault write secret/usercredentials username=testuser password=verysecure

# store a secret in the KV-2 mount "versioned"
$ vault kv put versioned/github apitoken=123123123
```

Next, we apply resources based on Vault-CRD's Custom Resource Definition to Kubernetes:

```yaml
# Synchronize the KV-1 secret stored at secret/usercredentials in HashiCorp Vault with Kubernetes
apiVersion: "koudingspawn.de/v1"
kind: Vault
metadata:
  name: usercredentials
spec:
  type: "KEYVALUE"
  path: "secret/usercredentials"
---
# Synchronize the KV-2 secret stored at versioned/github in HashiCorp Vault with Kubernetes
apiVersion: "koudingspawn.de/v1"
kind: Vault
metadata:
  name: github
spec:
  path: "versioned/github"
  type: "KEYVALUEV2"
  versionConfiguration:
    version: 1
```
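The two resource types exist because the two secret engines expose different HTTP APIs. In a KV-2 mount, reads go through a `data/` segment in the path and the values are nested one level deeper in the response. A specific version can also be requested with the `version` query parameter. The Python sketch below shows that difference without calling a real Vault. The helper names are hypothetical, and it assumes the mount is the first path segment; nested mount paths would need the mount passed separately.

```python
from typing import Dict, Optional


def kv_read_path(path: str, kv_version: int, version: Optional[int] = None) -> str:
    """Return the Vault HTTP API path used to read a KV secret.

    `path` is "mount/secret", e.g. "secret/usercredentials".
    """
    mount, _, secret = path.partition("/")
    if kv_version == 1:
        return f"/v1/{mount}/{secret}"
    # KV-2 inserts "data/" after the mount and can read a specific version.
    api_path = f"/v1/{mount}/data/{secret}"
    if version is not None:
        api_path += f"?version={version}"
    return api_path


def extract_values(response: dict, kv_version: int) -> Dict[str, str]:
    """Pull the key/value pairs out of a parsed JSON read response."""
    if kv_version == 1:
        return response["data"]
    # KV-2 wraps the values together with metadata: {"data": {"data": ..., "metadata": ...}}
    return response["data"]["data"]
```

For the two resources above, this yields `/v1/secret/usercredentials` and `/v1/versioned/data/github?version=1`.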

The secrets should now be synchronized with Kubernetes. If a secret changes in HashiCorp Vault, it is updated in Kubernetes as well. Because the KV-2 example requests version 1, it keeps syncing that specific version even after newer versions are written.
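One way an operator can keep Secrets in step with Vault is to re-read the Vault data periodically and only write to Kubernetes when something actually changed. The sketch below does this by comparing a hash of the current Vault data with the hash stored at the last sync. How Vault-CRD detects changes internally is not described here, so treat this as an illustrative assumption. `fingerprint` and `needs_update` are hypothetical names.

```python
import hashlib
import json
from typing import Dict, Optional


def fingerprint(data: Dict[str, str]) -> str:
    """Stable SHA-256 over the secret's key/value pairs (key order does not matter)."""
    canonical = json.dumps(data, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def needs_update(vault_data: Dict[str, str], last_synced_hash: Optional[str]) -> bool:
    """True if the Secret was never synced or the Vault data changed since."""
    return last_synced_hash is None or fingerprint(vault_data) != last_synced_hash


if __name__ == "__main__":
    stored = fingerprint({"username": "testuser", "password": "verysecure"})
    print(needs_update({"password": "verysecure", "username": "testuser"}, stored))  # False
    print(needs_update({"username": "testuser", "password": "changed"}, stored))  # True
```

The last hash could, for example, be kept as an annotation on the generated Secret, so that no extra state is needed between polling runs.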

```shell
$ kubectl get secret usercredentials github
NAME              TYPE     DATA   AGE
usercredentials   Opaque   2      12s
github            Opaque   1      10s
```

You can now use these secrets in your application, for example as environment variables:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  ...
spec:
  ...
  template:
    ...
    spec:
      ...
      containers:
        - ...
          env:
            - name: USERNAME
              valueFrom:
                secretKeyRef:
                  name: usercredentials
                  key: username
            - name: PASSWORD
              valueFrom:
                secretKeyRef:
                  name: usercredentials
                  key: password
            - name: APITOKEN
              valueFrom:
                secretKeyRef:
                  name: github
                  key: apitoken
          ...
```
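On the application side, the synchronized values are just ordinary environment variables. A minimal Python sketch of reading them and failing fast with a clear message when one is missing or empty, for example when the application is started outside the cluster. `load_credentials` is a hypothetical helper; the variable names match the Deployment above.

```python
import os
from typing import Dict, Mapping, Optional

# Environment variables populated from the usercredentials and github Secrets.
REQUIRED_VARS = ("USERNAME", "PASSWORD", "APITOKEN")


def load_credentials(environ: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Read the secret-backed variables, raising if any is missing or empty."""
    env = os.environ if environ is None else environ
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise RuntimeError(f"missing required environment variables: {', '.join(missing)}")
    return {name: env[name] for name in REQUIRED_VARS}
```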

Using Property files

With only a few secrets, it is easy to sync them this way and expose them to the pod/container as environment variables via valueFrom. But what about 10 or 15 secrets? How can we sync them automatically?

For this, Vault-CRD offers a secret type called PROPERTIES. It generates secrets from templates, so many values can be synced to Kubernetes in one go. Using the two secrets created in the example above, we can create a single properties secret that contains all of the values:

```yaml
apiVersion: "koudingspawn.de/v1"
kind: Vault
metadata:
  name: properties
spec:
  type: "PROPERTIES"
  propertiesConfiguration:
    files:
      application.properties: |
        github.apitoken={{ vault.lookupV2('versioned/github').get('apitoken') }}
        database.username={{ vault.lookup('secret/usercredentials', 'username') }}
        database.password={{ vault.lookup('secret/usercredentials', 'password') }}
```
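To illustrate what happens during rendering, here is a deliberately simplified Python sketch. It resolves the two lookup forms used in the template against an in-memory dictionary that stands in for Vault. Vault-CRD uses its own template engine, which supports more than these two patterns. The regular expressions and the `render` function are assumptions for illustration only.

```python
import re
from typing import Dict

# Matches {{ vault.lookup('path', 'key') }} (KV-1 style lookup)
LOOKUP_V1 = re.compile(r"\{\{\s*vault\.lookup\('([^']+)',\s*'([^']+)'\)\s*\}\}")
# Matches {{ vault.lookupV2('path').get('key') }} (KV-2 style lookup)
LOOKUP_V2 = re.compile(r"\{\{\s*vault\.lookupV2\('([^']+)'\)\.get\('([^']+)'\)\s*\}\}")


def render(template: str, secrets: Dict[str, Dict[str, str]]) -> str:
    """Replace both lookup forms with values from `secrets` (path -> key -> value)."""

    def resolve(match: "re.Match") -> str:
        path, key = match.group(1), match.group(2)
        return secrets[path][key]

    return LOOKUP_V2.sub(resolve, LOOKUP_V1.sub(resolve, template))


if __name__ == "__main__":
    fake_vault = {
        "versioned/github": {"apitoken": "123123123"},
        "secret/usercredentials": {"username": "testuser", "password": "verysecure"},
    }
    print(render("database.username={{ vault.lookup('secret/usercredentials', 'username') }}", fake_vault))
    # database.username=testuser
```

A missing path or key raises a `KeyError` here. Failing loudly like this is arguably preferable to silently rendering an empty value into a configuration file.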

We can now use the rendered properties file, for example, in a Spring Boot 2 application:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  ...
spec:
  ...
  template:
    ...
    spec:
      ...
      containers:
        - ...
          env:
            - name: SPRING_CONFIG_ADDITIONAL_LOCATION
              value: /opt/properties/application.properties
          volumeMounts:
            - mountPath: /opt/properties
              name: properties
      volumes:
        - name: properties
          secret:
            defaultMode: 420
            secretName: properties
```

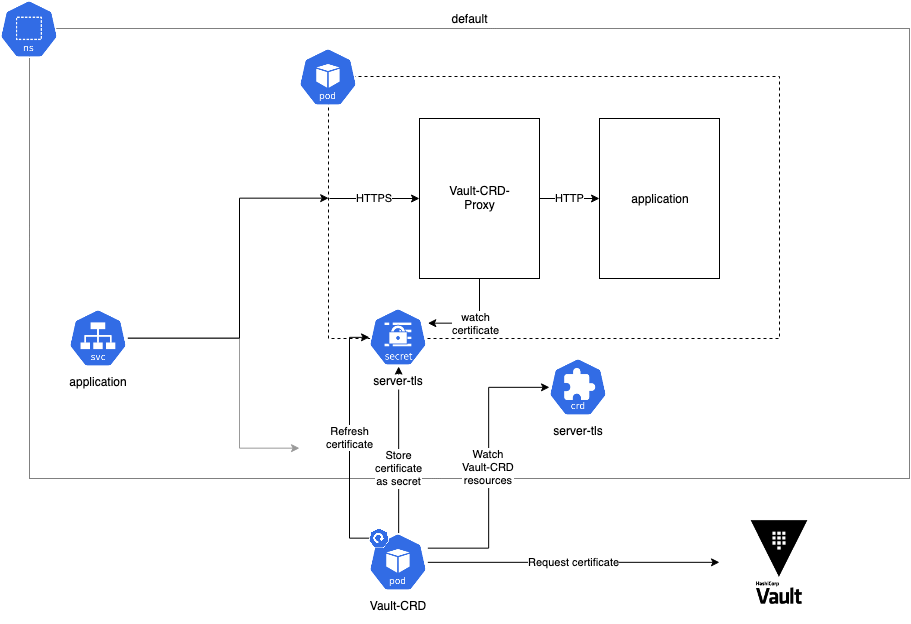
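Two details of the volume definition are easy to miss. Each key of the Secret becomes a file under the mount path, which is why the key application.properties ends up at /opt/properties/application.properties. Also, `defaultMode: 420` is simply the decimal spelling of the octal file mode 0644. JSON has no octal literals, so manifests often use the decimal form. A small Python check of both points (`mounted_files` is a hypothetical helper):

```python
import posixpath
import stat
from typing import List

DEFAULT_MODE = 420  # value used in the Deployment above


def mounted_files(mount_path: str, secret_keys: List[str]) -> List[str]:
    """Paths at which each Secret key appears when the Secret is mounted as a volume."""
    return [posixpath.join(mount_path, key) for key in secret_keys]


if __name__ == "__main__":
    print(oct(DEFAULT_MODE))  # 0o644
    print(stat.filemode(stat.S_IFREG | DEFAULT_MODE))  # -rw-r--r--
    print(mounted_files("/opt/properties", ["application.properties"]))
    # ['/opt/properties/application.properties']
```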

Write your own Proxy for in-cluster TLS encryption

Before Istio became popular, TLS encryption inside the cluster was really hard. Even today, if you don’t want to use Istio for whatever reason, it is still not easy. One possible solution is to mount a TLS secret into your application and perform the encryption in the application itself. The biggest problem with this approach is that certificates typically have a short lifetime and must be refreshed. You can handle this inside your application, but how do you reconfigure your server certificates without downtime?

That is why I’ve developed Vault-CRD-Proxy, a proxy based on Envoy that detects when the secret in the file system has changed and performs a hot reload. It spawns a new process with the new certificates and hands the listening sockets over to it. The old process drains its existing connections and is then shut down automatically.
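The detection part can be sketched in a few lines. Kubernetes updates a mounted Secret by atomically swapping a symlink (`..data`) inside the volume, so comparing file contents is a robust way to notice a new certificate. The Python sketch below polls the given files, hashes their contents, and calls a reload callback when they change. It only illustrates the idea. Vault-CRD-Proxy's actual detection and Envoy's hot restart are not reproduced here, and `CertWatcher` and its parameters are hypothetical.

```python
import hashlib
import time
from pathlib import Path
from typing import Callable, Iterable, Optional


class CertWatcher:
    """Hypothetical helper: polls files and calls `on_change` when their content changes."""

    def __init__(self, paths: Iterable[str], on_change: Callable[[], None]) -> None:
        self.paths = [Path(p) for p in paths]
        self.on_change = on_change
        self._last: Optional[str] = self._digest()

    def _digest(self) -> Optional[str]:
        """SHA-256 over all files; None if any file is missing (e.g. before the first sync)."""
        h = hashlib.sha256()
        for path in self.paths:
            try:
                # read_bytes() follows the symlinks Kubernetes uses inside Secret volumes.
                data = path.read_bytes()
            except FileNotFoundError:
                return None
            # Length prefix keeps file boundaries unambiguous in the combined hash.
            h.update(len(data).to_bytes(8, "big"))
            h.update(data)
        return h.hexdigest()

    def check_once(self) -> bool:
        """Call `on_change` and return True if the content changed since the last check."""
        current = self._digest()
        if current is not None and current != self._last:
            self._last = current
            self.on_change()
            return True
        return False

    def run(self, interval_seconds: float = 5.0) -> None:
        """Poll forever; a real proxy would start its reload from `on_change`."""
        while True:
            self.check_once()
            time.sleep(interval_seconds)
```

Pointed at the certificate files under the mount path (/etc/ssl/private in the Deployment below), `run()` checks every few seconds. Polling trades a little latency for simplicity compared to file-system events.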

The setup is again very simple. First, we create the server-tls Vault resource. So that other applications in the cluster can reach our application through its Service, the certificate should contain not only the Service name as Common Name but also, as an alternative name, the name qualified with the namespace the application runs in. Other applications can then reach the server at https://application.default:8443

```yaml
apiVersion: koudingspawn.de/v1
kind: Vault
metadata:
  name: server-tls
spec:
  path: "testpki/issue/testrole"
  pkiConfiguration:
    altNames: application.default
    commonName: application
  type: PKI
```
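Why both names? Clients in the same namespace can use the short Service name, while clients in other namespaces need the namespace-qualified form, and every name a client uses must appear in the certificate. The Python sketch below lists the usual in-cluster DNS names of a Service. It also builds a JSON body for Vault's PKI issue endpoint, where `common_name` and the comma-separated `alt_names` are real parameters. Whether to also include the `.svc` and fully qualified names is a design choice. `cluster.local` is only the default cluster domain, and the helper names are hypothetical.

```python
import json
from typing import List


def service_dns_names(service: str, namespace: str, cluster_domain: str = "cluster.local") -> List[str]:
    """In-cluster DNS names under which a Service is usually reachable."""
    return [
        service,                                        # same namespace
        f"{service}.{namespace}",                       # other namespaces
        f"{service}.{namespace}.svc",
        f"{service}.{namespace}.svc.{cluster_domain}",  # fully qualified
    ]


def pki_issue_body(common_name: str, alt_names: List[str]) -> str:
    """JSON body for Vault's PKI issue endpoint (POST /v1/{mount}/issue/{role})."""
    sans = [name for name in alt_names if name != common_name]
    return json.dumps({"common_name": common_name, "alt_names": ",".join(sans)})


if __name__ == "__main__":
    names = service_dns_names("application", "default")
    print(pki_issue_body(names[0], names[1:2]))
    # {"common_name": "application", "alt_names": "application.default"}
```

With `names[1:2]` the body matches the server-tls resource above; passing all names would additionally cover the `.svc` forms.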

Next, we create the Deployment with Vault-CRD-Proxy running in front of the application container:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  ...
spec:
  ...
  template:
    ...
    spec:
      ...
      containers:
        - ...
        - name: proxy
          env:
            - name: TARGET_PORT
              value: "8080"
          image: daspawnw/vault-crd-proxy:latest
          readinessProbe:
            failureThreshold: 3
            httpGet:
              path: /envoy-health-check
              port: 8443
              scheme: HTTPS
            initialDelaySeconds: 5
            periodSeconds: 10
            successThreshold: 1
            timeoutSeconds: 1
          ports:
            - containerPort: 8443
              name: https-proxy
              protocol: TCP
          volumeMounts:
            - mountPath: /etc/ssl/private
              name: server-tls
      volumes:
        - name: server-tls
          secret:
            defaultMode: 420
            secretName: server-tls
```

The application now starts with Vault-CRD-Proxy in front of it for TLS termination. The proxy accepts HTTPS on port 8443 and forwards requests to the application on TARGET_PORT (8080 here). When Vault-CRD refreshes the certificate, Envoy performs a hot reload to pick up the new one.

As a last step, we create the Service. With it in place, other applications in the cluster can reach our application over an encrypted channel:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: application
spec:
  selector:
    app: application
  ports:
    - protocol: TCP
      port: 8443
      targetPort: 8443
```

Any application that accesses the Service must trust our self-signed root certificate. For a Java application, the certificate must be imported into the cacerts trust store inside the Java installation directory:

```shell
keytool -importcert -alias self-signed-root -keystore /usr/lib/jvm/java-1.8-openjdk/jre/lib/security/cacerts -storepass changeit -file rootca.pem
```

For a Go application, the certificate must be appended to the ca-certificates.crt file in the /etc/ssl/certs directory:

```shell
cat rootca.pem >> /etc/ssl/certs/ca-certificates.crt
```
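Changing the system trust store is not the only option, since many clients can be pointed at a CA file directly. In Python, for example, `ssl.create_default_context(cafile=...)` builds a context that verifies the server certificate and hostname against only that CA file rather than the system store. A minimal sketch, where `make_client_context` and `fetch` are hypothetical helpers and rootca.pem is the root certificate from above:

```python
import ssl
import urllib.request
from typing import Optional


def make_client_context(root_ca_file: Optional[str] = None) -> ssl.SSLContext:
    """TLS client context that verifies the server certificate and hostname.

    With `root_ca_file`, only that CA (e.g. the self-signed rootca.pem) is trusted;
    without it, the system's default trust store is used.
    """
    return ssl.create_default_context(cafile=root_ca_file)


def fetch(url: str, root_ca_file: str) -> bytes:
    """GET `url` over HTTPS, trusting the given root CA file."""
    context = make_client_context(root_ca_file)
    with urllib.request.urlopen(url, context=context, timeout=5) as response:
        return response.read()


# Inside the cluster, for example:
# fetch("https://application.default:8443/", "rootca.pem")
```

Because hostname checking stays enabled, the URL must use one of the names in the certificate, such as application or application.default.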